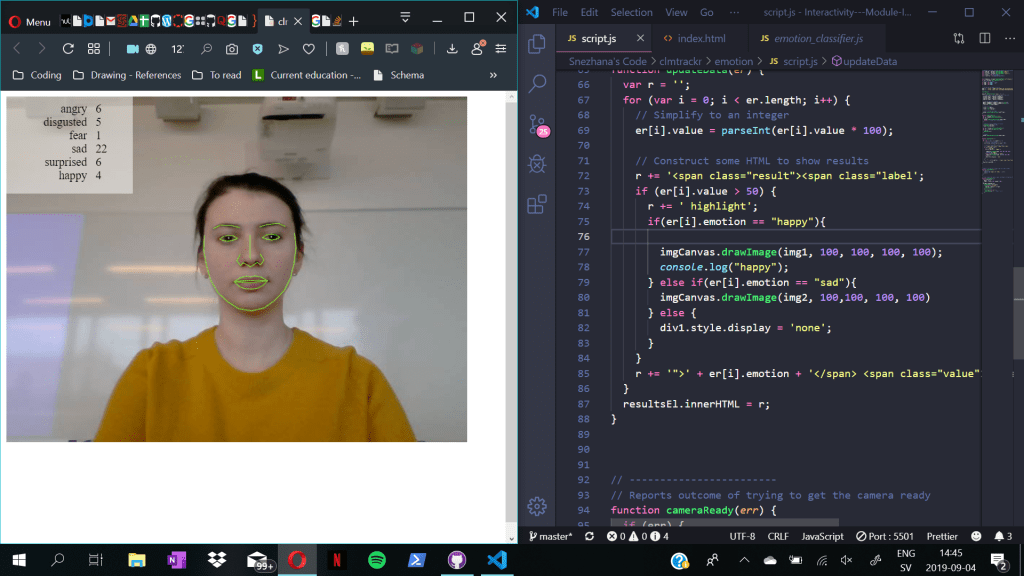

After the last coaching session, we moved our focus from the pixel and frame examples to the pose examples built on the PoseNet library. The feedback and our previous sketches had led us to a dead end where we were no longer sure what we were trying to achieve, so this felt like a fresh start in which we could work with a different type of interaction: indirect interaction with bodies.

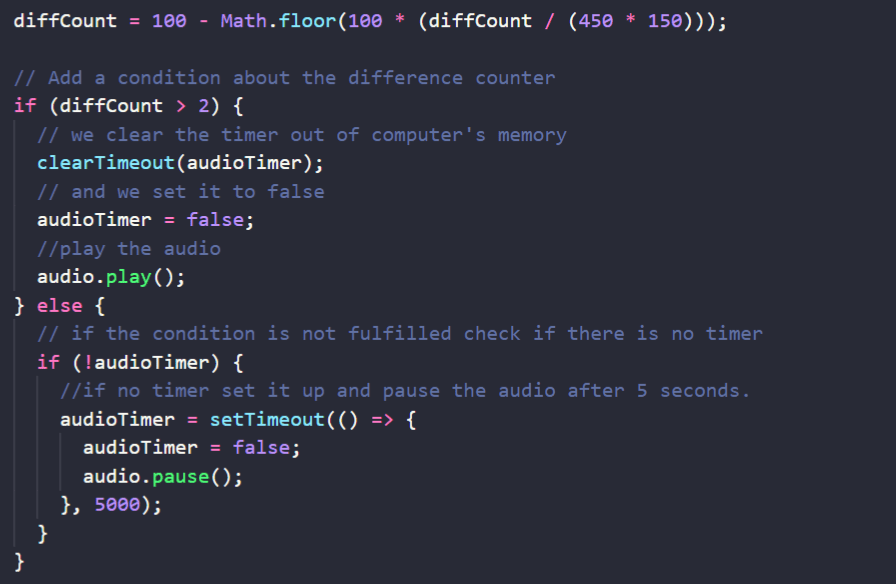

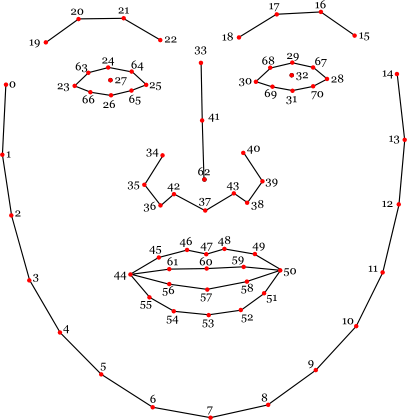

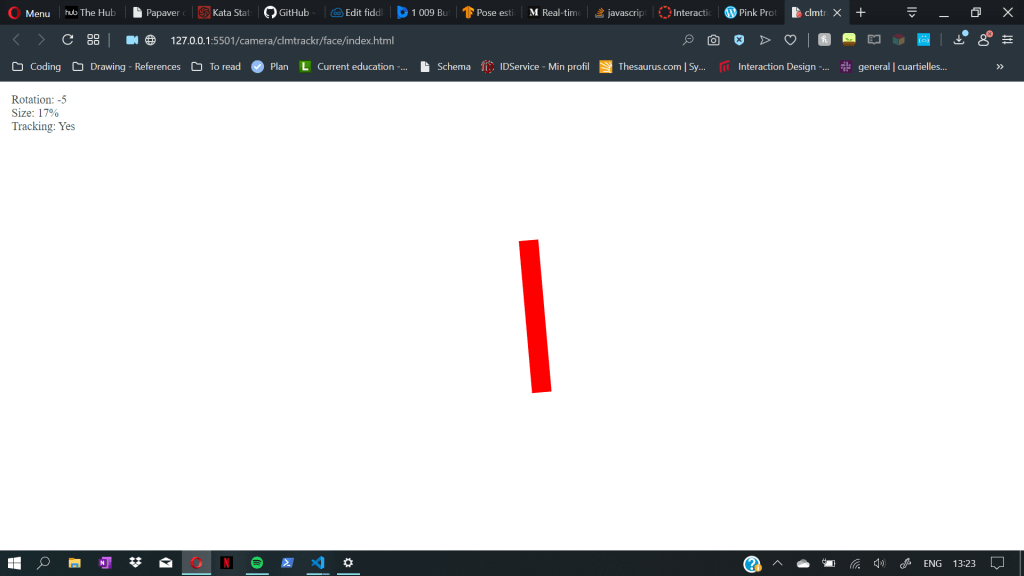

The new example was meant to detect people when they appear in the camera view and track their wrists, which could then be used as a controller. The sketch we created changed the pixels' colours across the RGB spectrum depending on the distance between the wrists. It looked “cool”, but it still felt as if we were not on the right track, because we did not know exactly what we were working towards.
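The wrist-distance mapping can be sketched roughly as below. This assumes the two wrist positions have already been read from PoseNet's keypoints as (x, y) pixel coordinates, and that a frame is simplified to a flat list of (r, g, b) tuples. The helper names, the red-to-blue colour ramp and the `max_distance` normalisation are hypothetical illustrations, not the code of our actual sketch.

```python
import math


def wrist_distance(left, right):
    """Euclidean distance in pixels between two (x, y) wrist positions."""
    return math.hypot(right[0] - left[0], right[1] - left[1])


def distance_to_rgb(distance, max_distance):
    """Map a wrist distance onto an RGB colour.

    Hypothetical ramp: wrists close together -> red, far apart -> blue,
    with a linear blend in between.
    """
    if max_distance <= 0:
        raise ValueError("max_distance must be positive")
    t = max(0.0, min(1.0, distance / max_distance))  # clamp to [0, 1]
    red = round(255 * (1 - t))
    blue = round(255 * t)
    return (red, 0, blue)


def tint_pixels(pixels, rgb, strength=0.5):
    """Blend every (r, g, b) pixel toward `rgb` by `strength` (0 = unchanged, 1 = replaced)."""
    return [
        tuple(round(p * (1 - strength) + c * strength) for p, c in zip(px, rgb))
        for px in pixels
    ]


if __name__ == "__main__":
    # Hypothetical wrist positions, as if read from one PoseNet detection.
    left_wrist = (100, 200)
    right_wrist = (400, 600)
    colour = distance_to_rgb(wrist_distance(left_wrist, right_wrist), max_distance=1000)
    frame = [(255, 255, 255), (0, 0, 0)]  # a tiny two-pixel "frame"
    print(colour, tint_pixels(frame, colour))
```

Note that the mapping is direct. Moving the wrists apart predictably shifts the colour, which is exactly the kind of obvious input-to-output link the supervision below suggests breaking.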

Reading through the existing code and figuring out what the goal of the project was felt very difficult and frustrating, since we did not have much time left to create a good sketch. We also asked other students whether anyone had a better understanding of the topic, but that did not help much.

Supervision with Jens

This Thursday we met with Jens for our coaching session. Since we were fine code-wise with our sketches, we focused on asking what we were supposed to be working towards. Jens explained that we should create an experience that is interactive in the sense that people use the sketch, but without being able to control the system directly and intentionally. It is important to break the direct link between input and output. For example, when there is a button that can be pressed, people naturally expect some kind of output or feedback. He explained that we should try to create something where the interaction is less direct, so that it is harder to understand how the system is being controlled. He also suggested that we brainstorm again, get some sketches on paper, and try to be more creative with indirect interaction.