Since the first sketch failed, we decided to continue exploring the sketches individually. The next sketch I worked on detects the distance between the user's face and the camera, as well as the rotation of the user's head. It works with the face points provided by clmtrackr, and it is a different example from the same library as the previous sketch.

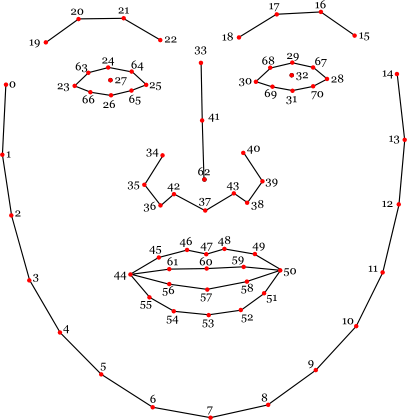

The distance between the screen and the user is estimated from the size of the face. For the vertical size we take the highest and the lowest points of the face, and for the horizontal size we take the two points that are furthest apart. In our case these are points 33 and 7 for the vertical distance and points 1 and 13 for the horizontal distance. We then take the average of these two distances, which gives an overall measure of how far the face is from the camera: the larger the value, the closer the user.
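A minimal TypeScript sketch of this calculation, assuming the landmarks arrive as an array of `[x, y]` pairs the way clmtrackr's `getCurrentPosition()` returns them (or `false` when no face is tracked). The function names `dist` and `faceSize` are hypothetical and not taken from the original sketch.

```typescript
// A landmark as an [x, y] pair in canvas pixels.
type Point = [number, number];

// Euclidean distance between two landmarks.
function dist(a: Point, b: Point): number {
  return Math.hypot(a[0] - b[0], a[1] - b[1]);
}

// Average of the vertical (33 to 7) and horizontal (1 to 13) face spans.
// A larger value means the face is closer to the camera.
// Returns null when the tracker reports no face (assumed to be `false`).
function faceSize(positions: Point[] | false): number | null {
  if (!positions) return null;
  const vertical = dist(positions[33], positions[7]);
  const horizontal = dist(positions[1], positions[13]);
  return (vertical + horizontal) / 2;
}

// Usage with made-up coordinates instead of live tracker output.
const demo: Point[] = Array.from({ length: 71 }, (): Point => [0, 0]);
demo[33] = [100, 50];
demo[7] = [100, 250]; // vertical span: 200
demo[1] = [20, 150];
demo[13] = [180, 150]; // horizontal span: 160
console.log(faceSize(demo)); // 180
```

Averaging the two spans makes the value less sensitive to a single axis shrinking, for example when the head tilts and the vertical span drops while the horizontal one stays roughly the same.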

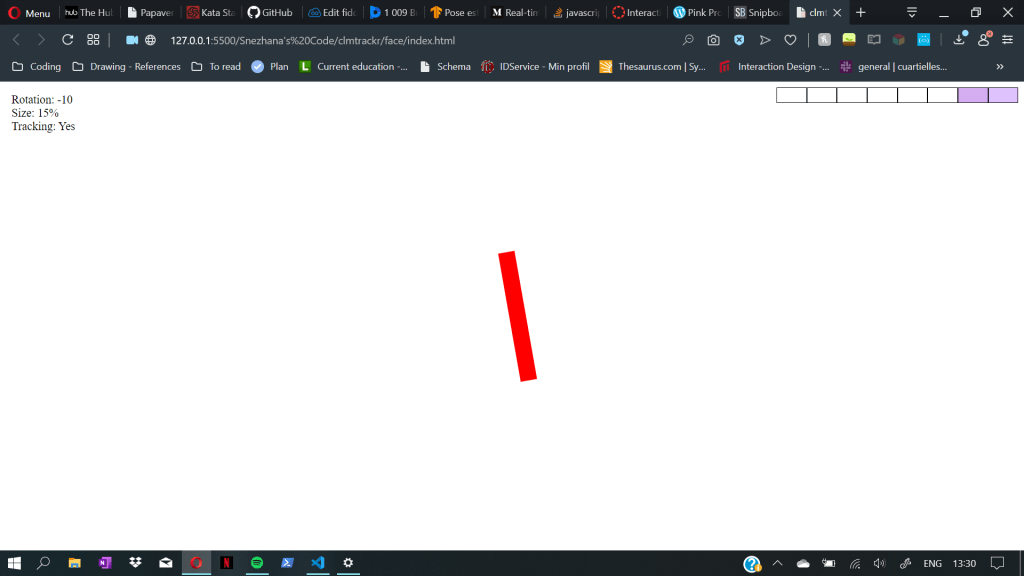

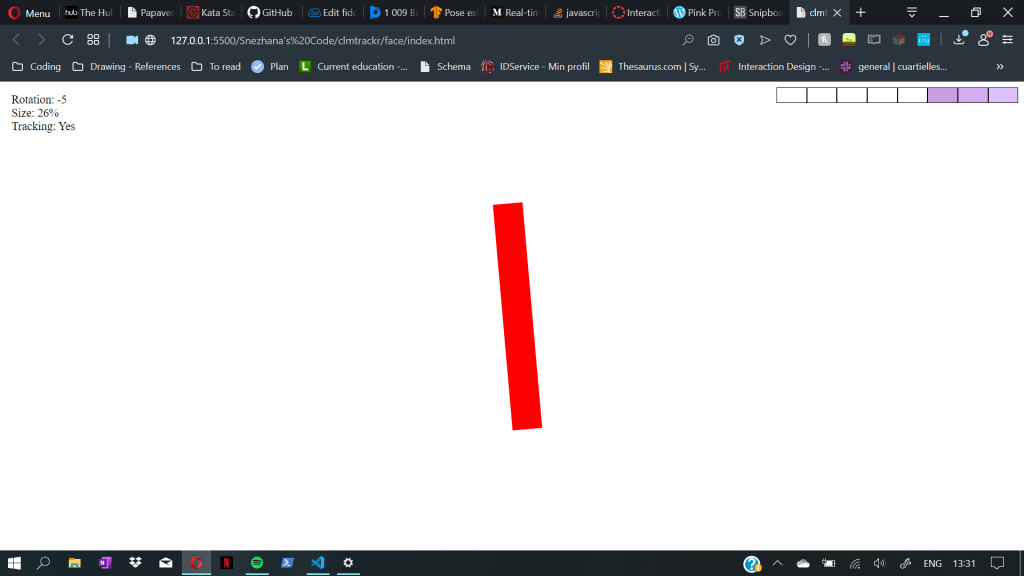

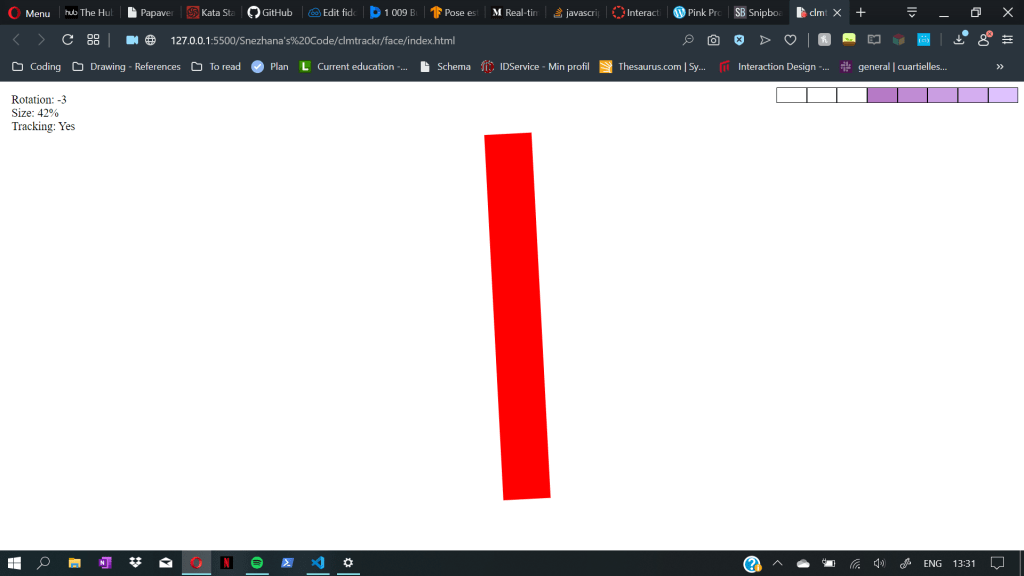

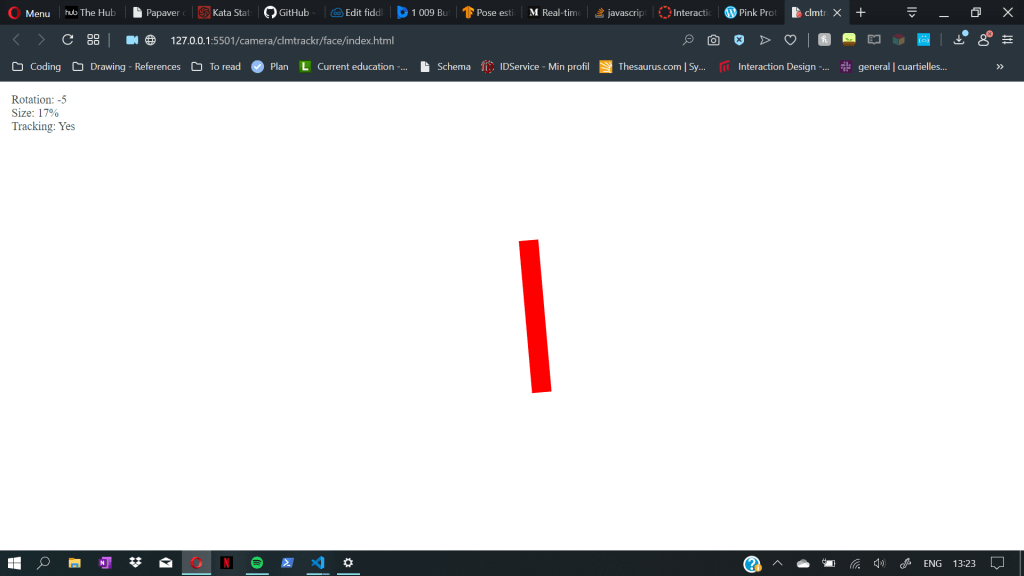

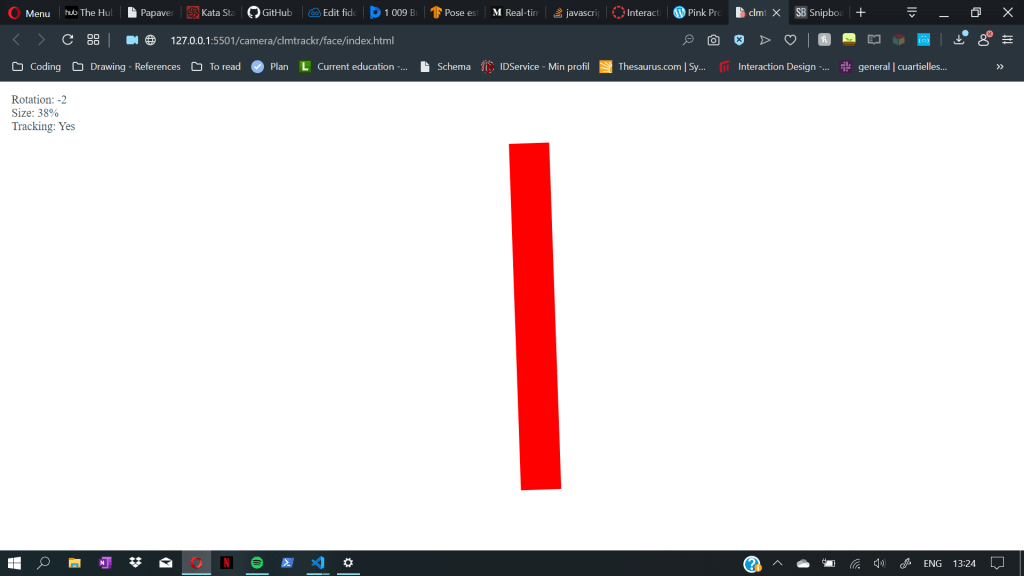

That distance is shown as a line whose length changes along with the movement of the face. When the face is farthest away the line is shortest, and it grows as we get closer.
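One way to turn the face size into a line length is a clamped linear mapping, similar to p5.js `map()`. The sketch below is only an illustration: the calibration constants are hypothetical and would have to be measured for the actual camera and canvas, and `mapRange` and `lineLength` are made-up names.

```typescript
// Linear re-mapping of `value` from [inMin, inMax] to [outMin, outMax],
// clamped so the line never leaves the output range.
function mapRange(
  value: number,
  inMin: number,
  inMax: number,
  outMin: number,
  outMax: number
): number {
  const t = (value - inMin) / (inMax - inMin);
  const clamped = Math.min(Math.max(t, 0), 1);
  return outMin + clamped * (outMax - outMin);
}

// Hypothetical calibration values (pixels).
const MIN_FACE = 80; // face size when the user is far away
const MAX_FACE = 300; // face size when the user is close
const MIN_LINE = 10; // shortest line
const MAX_LINE = 400; // longest line

// Bigger face (closer user) gives a longer line.
function lineLength(faceSize: number): number {
  return mapRange(faceSize, MIN_FACE, MAX_FACE, MIN_LINE, MAX_LINE);
}

console.log(lineLength(190)); // 205, halfway between 10 and 400
```

The clamping keeps the indicator stable when the measured face size briefly jumps outside the calibrated range.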

Further away from the camera

Closer to the camera

I worked on two iterations of this code. The first was an audio controller based on the rotation and the distance of the head. The second added indicators for the distance and the volume of the song.

For the indicator I decided to reuse code from one of the previous programming courses. It saved me a lot of time and left more room to work on the new code instead of rewriting the same old functions.

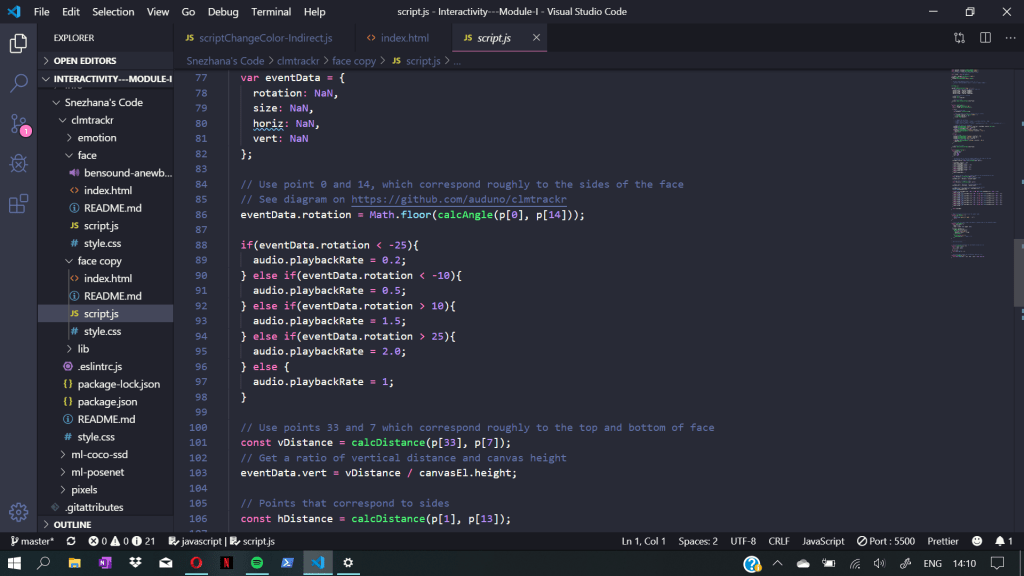

In the first iteration the code controlled the volume and the speed of the music. The playback speed was controlled by the head rotation value. The code takes the data gathered from the face points and runs it through a series of if conditions to pick the right speed. If none of those conditions is true, it plays the sound at its normal speed.
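A sketch of such an if chain, under two assumptions not stated above: the rotation value is taken as the roll angle of the line from landmark 1 to landmark 13 (the original sketch may compute it differently), and the thresholds and speeds are invented for illustration. `rollDegrees` and `playbackRate` are hypothetical names.

```typescript
type Point = [number, number];

// Hypothetical rotation measure: roll angle in degrees of the line from
// landmark 1 to landmark 13, in canvas coordinates (y grows downward).
function rollDegrees(left: Point, right: Point): number {
  return (Math.atan2(right[1] - left[1], right[0] - left[0]) * 180) / Math.PI;
}

// Series of if conditions picking a playback speed; thresholds are made up.
function playbackRate(rotation: number): number {
  if (rotation > 20) return 1.5;
  if (rotation > 10) return 1.25;
  if (rotation < -20) return 0.5;
  if (rotation < -10) return 0.75;
  return 1; // no condition matched: normal speed
}

const rotation = rollDegrees([20, 150], [180, 150]); // level head: 0 degrees
console.log(playbackRate(rotation)); // 1
```

In a browser, the returned value could be assigned to an `HTMLAudioElement`'s `playbackRate` property, where 1 means normal speed.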

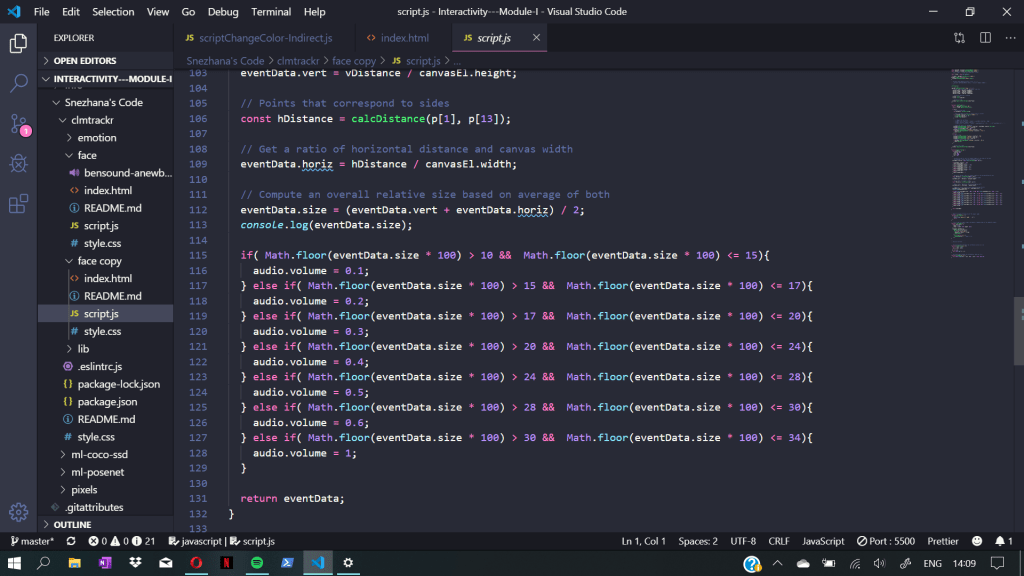

The next if statement that checks the gathered data is the volume controller. After the size of the face is calculated, it checks which range the size falls into and sets the volume accordingly.
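A minimal sketch of a range-based volume controller. The range limits are hypothetical, and the direction (a closer face, i.e. a larger face size, gives a louder song) is an assumption; `volumeForFaceSize` is a made-up name.

```typescript
// Picks a volume between 0 and 1 by checking which range the face size
// (in pixels) falls into. Limits are hypothetical and need tuning.
function volumeForFaceSize(size: number): number {
  if (size < 100) {
    return 0.2; // far away: quiet
  } else if (size < 150) {
    return 0.4;
  } else if (size < 200) {
    return 0.6;
  } else if (size < 250) {
    return 0.8;
  }
  return 1.0; // very close: full volume
}

console.log(volumeForFaceSize(180)); // 0.6
```

The 0 to 1 output matches the range accepted by an `HTMLAudioElement`'s `volume` property. Ordering the checks from smallest to largest means each branch only needs an upper bound.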

Coaching Session with Clint

For today's coaching, we met with Clint so that he could give us feedback on our tinkering with the code so far. We presented our sketches and asked more in-depth questions about the topic. One of our main struggles with this project was not knowing exactly what the sketches were supposed to present and do. As a team we were unsure how to approach the subject and how to overcome this problem.

His explanation was somewhat helpful. He used a practical example to describe the interaction with the system:

If there is a camera in the corner of a room that detects the people present, and we create a system that reacts to the number of people wearing blue shirts, we create a kind of indirect interaction. The number of people in blue shirts controls the lighting in the room, but they cannot easily manipulate the system.