Re-exam (underlined text is part of the re-exam)

As we were split into groups of two, my teammate and I decided to divide the tasks and tinker with different pieces of code to achieve some kind of interaction through the camera. The main reason was to test as many sketches as possible during the exploration stage of the project.

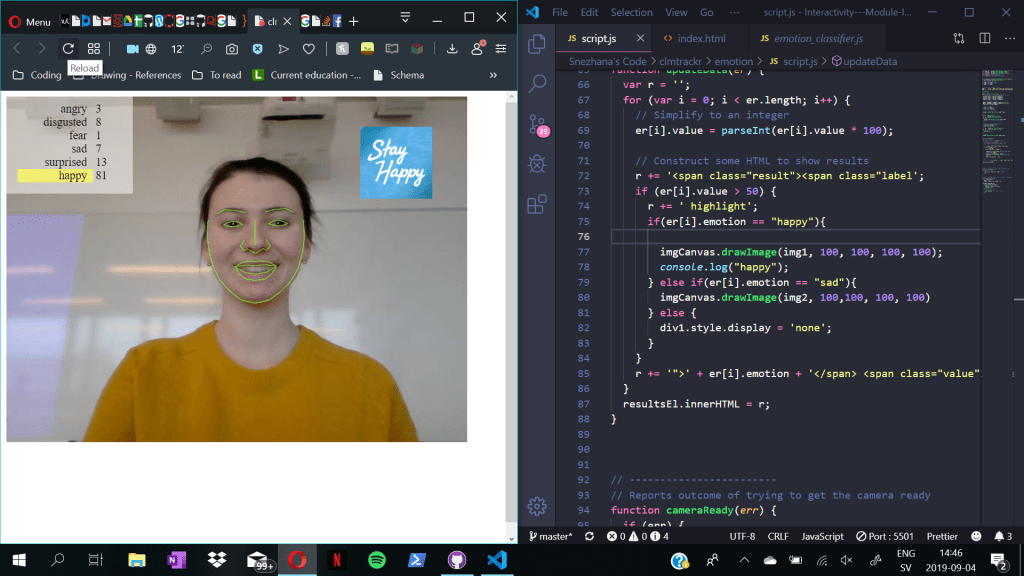

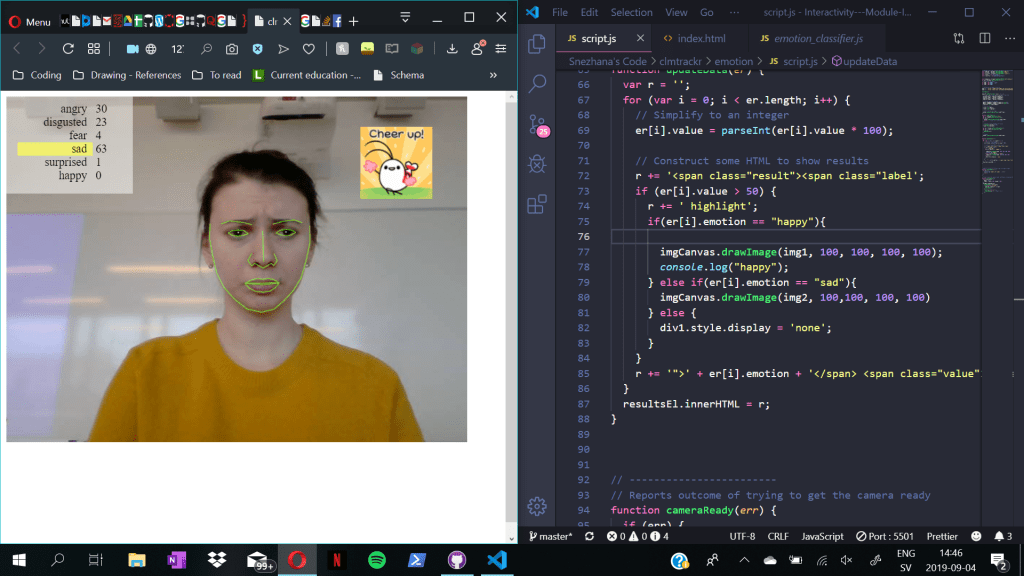

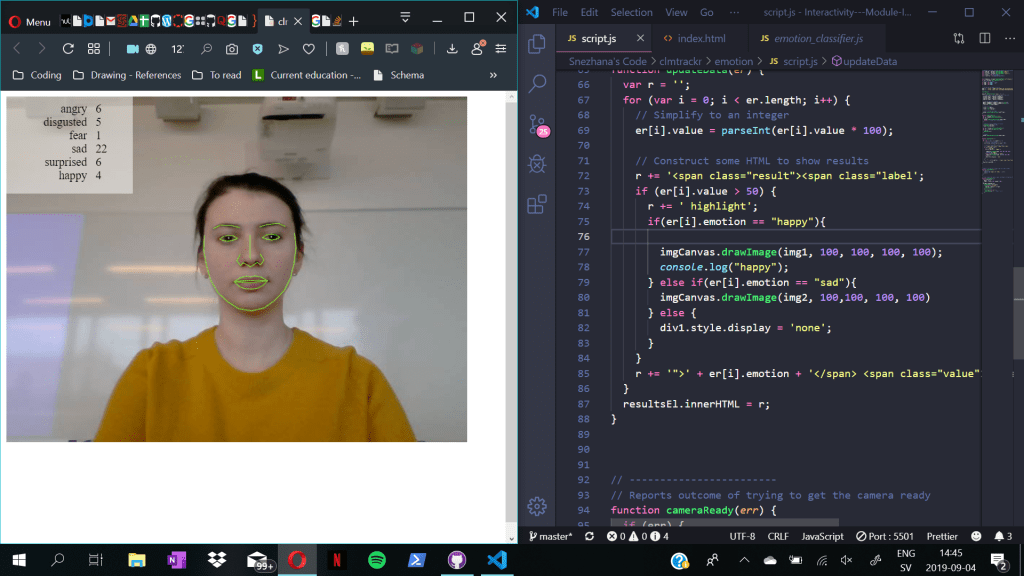

One of the first sketches I worked with used a library called clmtrackr. The sketch was based on the emotions of the face registered by the camera. For example, if the person is smiling, the library detects this from a set of points it places on the face, and the detected emotion is shown in the top left corner.

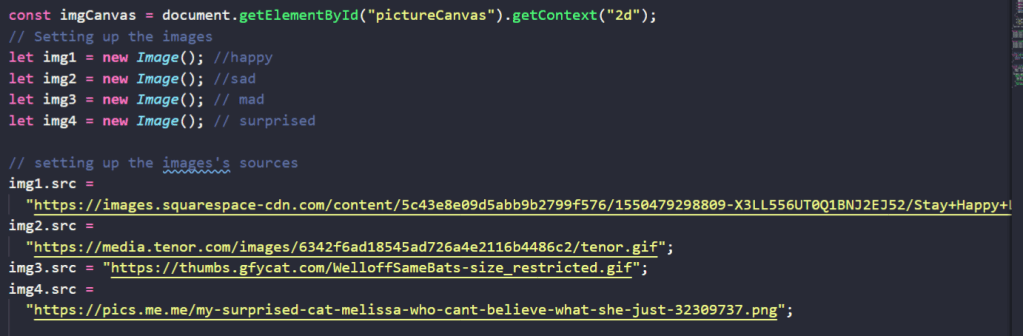

To try working with the data the camera receives, I decided to add reaction pictures based on the user's facial expression. In the HTML I created a new div to contain the images. Then, through the DOM, I connected the div with the images and their sources.

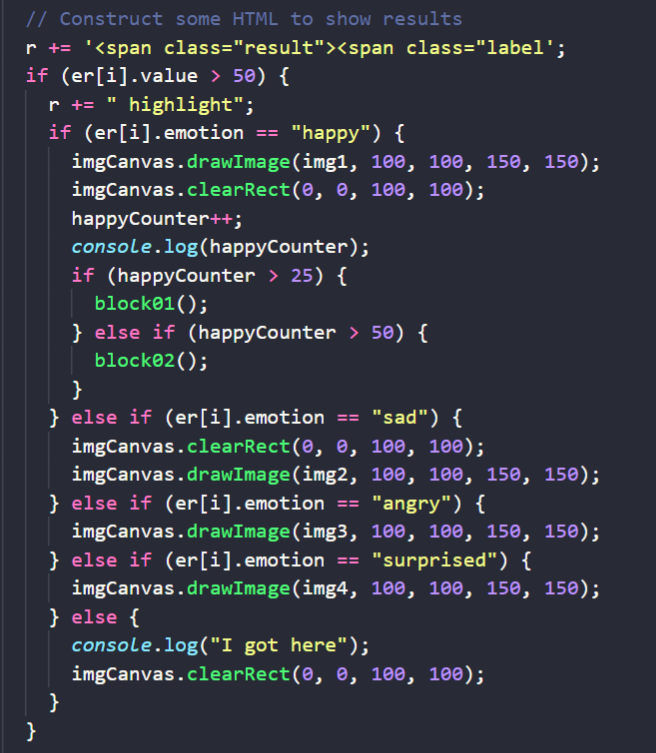
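A minimal sketch of this step could look like the following. The container id `reactions`, the image file names, and the helper `addReactionImages` are assumptions made for illustration, not the names used in the actual sketch. The document object is passed in as a parameter, so in the browser it would simply be `document`.

```javascript
// Hypothetical file names: the real image sources are not given in the text.
const REACTION_IMAGES = {
  happy: "stay-happy.png",
  sad: "cheer-up.png",
};

// Creates one hidden <img> per emotion inside the container div and
// returns the created elements keyed by emotion name.
function addReactionImages(doc, containerId, sources) {
  const container = doc.getElementById(containerId);
  const images = {};
  for (const [emotion, src] of Object.entries(sources)) {
    const img = doc.createElement("img");
    img.src = src;
    img.alt = emotion;
    img.style.display = "none"; // stays hidden until its emotion is detected
    container.appendChild(img);
    images[emotion] = img;
  }
  return images;
}
```

In the page this would be called once at startup, for example `addReactionImages(document, "reactions", REACTION_IMAGES)`, assuming the HTML contains a div with that id.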

I continued by adding conditions through “if” statements that check which emotion is currently being detected. The library produces a numeric value for each emotion. If a value is greater than 50, that emotion is highlighted, and the conditions then check whether the highlighted emotion matches a certain string.

If the emotion is, for example, “happy”, the picture with the text “stay happy” shows up on the screen. If the emotion is “sad”, the canvas draws a “cheer up” image instead.
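The logic described above can be sketched roughly as follows. This is only an illustration under assumptions: the predictions are assumed to arrive as a list of objects with an `emotion` name and a numeric `value`, the threshold of 50 is taken from the text, and `pickReaction` is a hypothetical helper rather than part of clmtrackr.

```javascript
// Threshold taken from the text; the value scale is an assumption.
const THRESHOLD = 50;

// Emotion string -> reaction text shown on the picture.
const REACTIONS = new Map([
  ["happy", "stay happy"],
  ["sad", "cheer up"],
]);

// Returns the reaction text for the strongest emotion above the threshold,
// or null if no emotion is highlighted or it has no reaction picture.
function pickReaction(predictions, threshold = THRESHOLD) {
  let best = null;
  for (const p of predictions) {
    if (p.value > threshold && (best === null || p.value > best.value)) {
      best = p;
    }
  }
  if (best === null) return null;
  return REACTIONS.has(best.emotion) ? REACTIONS.get(best.emotion) : null;
}
```

Keeping the decision in one small function separates it from the drawing code, so the same check can decide which reaction image to show on every frame.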

But since this example relied mostly on direct interaction that the person can manipulate, we decided not to go through with it.